In a move aimed at reshaping the artificial intelligence (AI) landscape, Microsoft and Mistral AI have announced a new partnership to accelerate AI innovation and make the Mistral Large model available first on Azure. The collaboration is a pivotal moment for both Microsoft and the Paris-based startup. The two companies will combine their strengths to advance AI technology and bring new solutions to customers worldwide.

A Shared Vision for the Future of AI

At the heart of this partnership is a shared focus on developing trustworthy, scalable, and responsible AI. Mistral AI, known for its innovative approach and its commitment to the open-source community, gains a complementary partner in Microsoft, which brings the Azure AI platform and its investment in cutting-edge AI infrastructure.

Eric Boyd, Corporate Vice President at Microsoft, emphasizes the significance of this partnership, stating, "Together, we are committed to driving impactful progress in the AI industry and delivering unparalleled value to our customers and partners globally."

Unleashing New Possibilities with Mistral Large

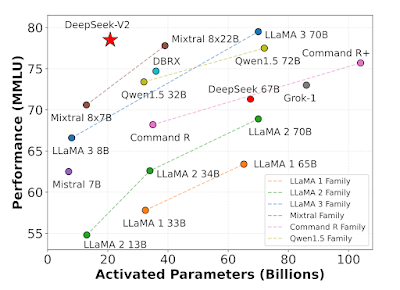

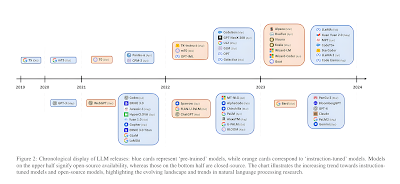

Mistral Large is the centerpiece of this partnership. It is a state-of-the-art large language model (LLM) with strong reasoning and knowledge capabilities. The model is fluent in French, German, Spanish, and Italian as well as English. Its 32K-token context window lets it work with long documents, and it performs well on code and mathematics. Together, these capabilities make it a versatile tool for a wide range of text-based use cases.

Adding Mistral Large to the Azure AI model catalog, which is accessible through Azure AI Studio and Azure Machine Learning, expands Microsoft's model offerings and gives customers a broader choice among the latest AI models.

Empowering Innovation Across Industries

The collaboration is also about the practical impact it can have across industries. Companies such as Schneider Electric, Doctolib, and CMA CGM have already begun exploring Mistral Large, and they report that its performance and efficiency could transform their operations.

Philippe Rambach, Chief AI Officer at Schneider Electric, pointed to the model's strong performance and its potential to improve internal efficiency. Similarly, Nacim Rahal of Doctolib highlighted how effectively the model handles medical terminology, which opens opportunities for innovation in healthcare.

A Foundation for Trustworthy and Safe AI

Beyond the technology itself, the partnership reflects a shared commitment to building AI systems and products that are trustworthy and safe. Microsoft's support for AI innovation worldwide and its work on secure technology align closely with Mistral AI's vision for the future.

Because Mistral AI models are available in Azure AI Studio, customers can use Azure AI Content Safety and Microsoft's responsible AI tools to strengthen the security and reliability of the applications they build. The goal is to advance AI technology while making sure its benefits are delivered responsibly and ethically.

Looking Ahead

As Microsoft and Mistral AI begin working together, their partnership signals a new phase of innovation, efficiency, and responsible technology development in AI. With Mistral Large leading the way, the two companies are well positioned to shape what comes next.